You may also wish to build the package documentation following the instructions in $SPARK_HOME/R/DOCUMENTATION.md.

# Download Spark with Hadoop for Linux: archive

To connect to Spark from R, you must first build the SparkR package by following the instructions in $SPARK_HOME/R/README.md. The R package SparkR is distributed with Spark but is not built during installation.

If you have taken the hands-on course mentioned above, you can download the NOTES.txt files, examples, and data archive directly to the VM using wget. The archive is available in both compressed tar (tgz) and Zip (zip) format.

Spark has an interactive mode that gives the user more control while jobs run. Hadoop handles very large datasets efficiently in batches, whereas Spark can also process data in real time, such as feeds from Facebook and Twitter. In this comparison, Hadoop acts as a batch data processing engine and Spark as a real-time data analyzer.

Some environment variables are set in /etc/profile.d/apache-spark.sh. If your shell does not source /etc/profile.d, you may need to adjust your PATH environment variable yourself.

Hadoop YARN: a YARN deployment means, simply, that Spark runs on YARN without any pre-installation or root access. This helps integrate Spark into the Hadoop ecosystem (the Hadoop stack) and allows other components to run on top of the same stack.
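To check whether the Spark environment is actually visible to your shell after installation, a small script can help. The following is a minimal Python sketch, assuming the conventional layout in which Spark's launch scripts (such as spark-shell and spark-submit) live in $SPARK_HOME/bin, and assuming SPARK_HOME is among the variables your setup exports. The function name `missing_spark_env` is hypothetical.

```python
import os
from typing import List, Mapping


def missing_spark_env(env: Mapping[str, str]) -> List[str]:
    """Return a list of problems found in a Spark-related environment.

    Assumption: Spark's launch scripts live in $SPARK_HOME/bin, and the
    installation is expected to export SPARK_HOME and extend PATH.
    """
    problems: List[str] = []
    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        problems.append("SPARK_HOME is not set")
        return problems

    # Normalize paths so a trailing slash in PATH does not cause a false alarm.
    spark_bin = os.path.normpath(os.path.join(spark_home, "bin"))
    path_entries = [
        os.path.normpath(entry)
        for entry in env.get("PATH", "").split(os.pathsep)
        if entry
    ]
    if spark_bin not in path_entries:
        problems.append(f"{spark_bin} is not on PATH")
    return problems


if __name__ == "__main__":
    issues = missing_spark_env(os.environ)
    if issues:
        for issue in issues:
            print("Problem:", issue)
    else:
        print("SPARK_HOME and PATH look OK")
```

If the script reports problems in a fresh login shell, the shell probably did not source /etc/profile.d/apache-spark.sh; sourcing that file manually, or adding the equivalent export lines to your shell's startup file, are common workarounds.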

# Download Spark with Hadoop for Linux: installation

Follow one of these articles to install Hadoop 3.3.0 on your UNIX-like system:

- Install Hadoop 3.3.0 on Linux
- Install Hadoop 3.3.0 on Windows 10 using WSL (follow this page if you plan to install it on WSL)

OpenJDK 1.

If you download a Spark package pre-built with Hadoop, you do not need to configure Hadoop 3.3.0. Spark and MapReduce can also run side by side on the same cluster to cover all Spark jobs.

By allowing user programs to load data into a cluster's memory and query it repeatedly, Spark is well suited to machine learning algorithms. In contrast to Hadoop's two-stage, disk-based MapReduce paradigm, Spark's in-memory primitives provide performance up to 100 times faster for certain applications.

I want to set up Hadoop, Spark, and Hive on my personal laptop.
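Whether a Spark download bundles Hadoop can usually be read from its archive name. The following is a minimal Python sketch, assuming the naming pattern used for Spark binary releases, for example `spark-3.0.1-bin-hadoop3.2.tgz` (pre-built with Hadoop) versus `spark-3.0.1-bin-without-hadoop.tgz` (Hadoop-free build). The names `parse_spark_archive` and `SparkArchive` are hypothetical, and only `.tgz` archives are handled.

```python
import re
from typing import NamedTuple, Optional

# Assumed naming pattern: spark-<version>-bin-hadoop<version>[-suffix].tgz
# or spark-<version>-bin-without-hadoop.tgz. Extra suffixes such as
# "-scala2.13" after the Hadoop part are accepted but ignored.
_SPARK_ARCHIVE = re.compile(
    r"^spark-(?P<spark>\d+(?:\.\d+)+)"
    r"-bin-(?:without-hadoop|hadoop(?P<hadoop>\d+(?:\.\d+)*))"
    r"(?:-[\w.]+)*"
    r"\.tgz$"
)


class SparkArchive(NamedTuple):
    spark_version: str
    hadoop_version: Optional[str]  # None for "without-hadoop" builds

    @property
    def bundles_hadoop(self) -> bool:
        """True when the package is pre-built with Hadoop libraries."""
        return self.hadoop_version is not None


def parse_spark_archive(filename: str) -> SparkArchive:
    """Extract the Spark and (optional) Hadoop versions from an archive name."""
    match = _SPARK_ARCHIVE.match(filename)
    if match is None:
        raise ValueError(f"not a recognized Spark binary archive name: {filename}")
    return SparkArchive(match.group("spark"), match.group("hadoop"))


if __name__ == "__main__":
    for name in ("spark-3.0.1-bin-hadoop3.2.tgz", "spark-3.0.1-bin-without-hadoop.tgz"):
        info = parse_spark_archive(name)
        if info.bundles_hadoop:
            print(f"{name}: Spark {info.spark_version}, pre-built with Hadoop {info.hadoop_version}")
        else:
            print(f"{name}: Spark {info.spark_version}, Hadoop must be provided separately")
```

With a pre-built package, the bundled Hadoop client libraries are used, which is why a separate Hadoop configuration is not required; with a Hadoop-free build, you supply your own Hadoop installation.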

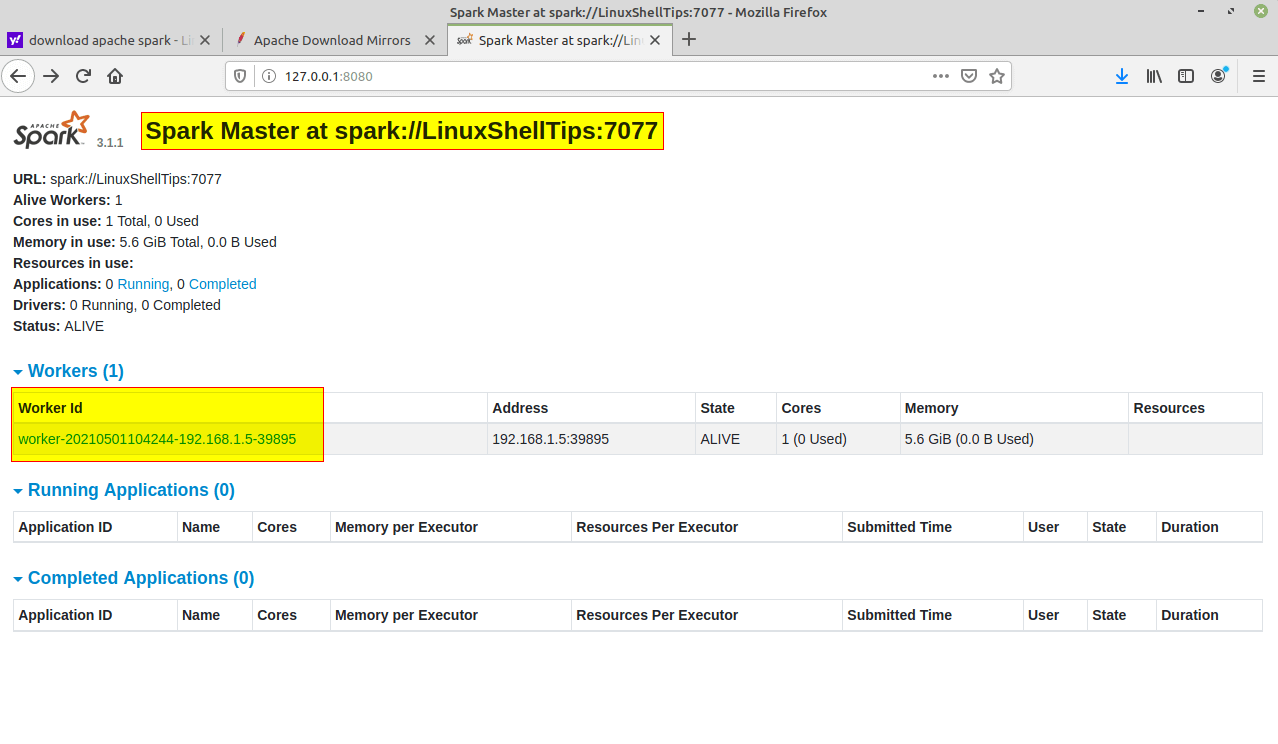

Apache Spark is an open-source cluster computing framework originally developed in the AMPLab at UC Berkeley. Installing Hadoop, Spark, and Hive in Windows Subsystem for Linux (WSL).